Understanding Database Options Governing Statistics Creation

Why are statistics so critical? Because without this insight into how your data is distributed in your tables and indexes, the SQL Server Query Optimizer (QO) would not have any understanding of how your data is distributed.

I recently spoke at IT/Dev Connections on the subject of distribution statistics for Microsoft SQL Server. It’s my opinion the subject is important enough to devote time to content covering statistics.

Why Are Statistics Vital to SQL Server?

Why are statistics so critical? Because without these statistics – which provide insight into how your data is distributed in your tables and indexes – the SQL Server Query Optimizer (QO) would not have any understanding of how your data is distributed, thus it won't know what operations are best suited (and most efficient) for processing your queries. This would result in execution plans created by the QO consistently using scans of indexes and tables and inefficient join decisions that would render the entire platform painful to use.

What are Distribution Statistics?

Statistics represent the distribution of the data within a column or columns. The QO stores statistics for a table or indexed view in a statistics object. For a table, the statistics object is created on either an index or a list of table columns. The statistics object is comprised of three components: a header with metadata about the statistics, a histogram with the distribution of values in the first key column of the statistics object, and a density vector to measure cross-column distinctiveness of values which is also referred to as cardinality. The Database Engine can compute cardinality estimates with any of the data in these statistics objects.

How are Statistics Used?

The query optimizer uses statistics to determine how to satisfy your SQL query based upon the distribution of data values to estimate how many rows will be returned from each operation in a query plan and also to select which query plan operation is appropriate along the way for such things as whether a table/index seek is more appropriate for selecting data versus a table/index scan or whether a hash join is better than a merge join for combining data sets from different operations along the path of your query plan for example. If the QO can’t determine if a given predicate in a search or join has a high cardinality (is very unique compared to the full domain of values) then improper joining as consistent use of scans of full tables or indexes would always be the go-to operation for such portions of execution plans. With a knowledge of cardinality values the QO can utilize lower-cost operations to return results faster to those sending requests to the database.

How Are Statistics Created?

Statistics are either created automatically – depending on database-level settings and through administrative actions such as creating or rebuilding indexes, or manually as you see fit. When an index is created or rebuilt SQL Server will take the time to either create or update the existing statistics. The act of creating or rebuilding an index requires all the pages of the table or index to be read so this is the perfect time for the SQL Server engine to get an idea of the data distribution and so in the most complete way possible – with a full scan of the data.

Additionally, there are database-level settings that control how statistics are automatically created and updated. Those settings are: Auto Create Statistics, Auto Update Statistics, and Auto Update Statistics Asynchronously. They are set via ALTER DATABASE commands as shown below:

ALTER DATABASE [database_name] SET AUTO_CREATE_STATISTICS ON|OFF;

GO

ALTER DATABASE [database_name] SET AUTO_UPDATE_STATISTICS ON|OFF;

GO

ALTER DATABASE [database_name] SET AUTO_UPDATE_STATISTICS_ASYNC ON|OFF;

GO

Each one of these database options are described below.

AUTO_CREATE_STATISTICS Explained

When set to “ON”, AUTO_CREATE_STATISTICS allows for automatic creation of statistics on columns used for search or join predicates where none currently exist for a given column. This means that if there isn’t an existing statistics object created either when an index was created or statistics created through manual intervention then SQL Server will create the necessary statistics as part of building a query execution plan as part of the overhead of plan generation. This also will occur if the column involved is part of a multi-column statistic but is not the leading column as the leading column of an index receives “special attention” over the remaining columns that may follow. We will discuss more about the different attention leading and non-leading columns get as we proceed further through this series of articles on distribution statistics in SQL Server. I want to reiterate this creation event will occur as part of plan generation and does add to the overhead of generating the plan. This setting is ON by default.

AUTO_UPDATE_STATISTICS Explained

When set to “ON”, AUTO_UPDATE_STATISTICS allows for automatic updating of statistics by SQL Server when the existing statistics are considered out of date by the query engine. The process/overhead of statistics updates will occur when the associated column(s) are required as part of the next query being executed after SQL Server identifies the stats are out of date. How this occurs is through a modification counter that keeps track of the number of changes made in an object associated with a statistics object over time (since the last creation or update event, whichever occurred most recently.) The default behavior, up until the release of SQL Server 2016, was as follows as it pertains to statistics being marked as out of date:

On tables with no rows, statistics will be marked for updating the first time a row is inserted.

On tables with 1-500 rows, statistics will be marked for updating when the leading column in the statistics object has encountered more than 500 updates since last time the stats were updated.

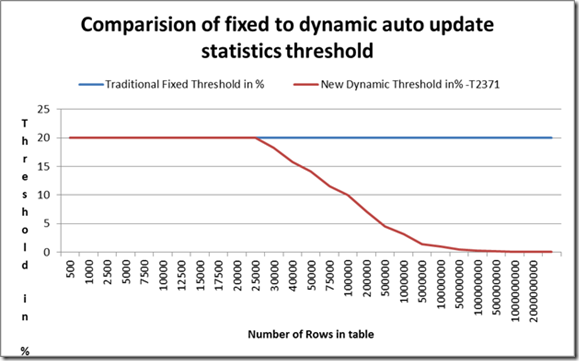

On tables with more than 500 rows, statistics will be marked for updating when the leading column in the statistics object has encountered more than 500 updates + 20% of the row count of the columns have also been updated since last time the stats were updated.

You can possibly see why the last situation isn’t conducive to maintaining relatively current statistics on larger tables: the requirement that 20% of column values + 500 is a threshold that gets harder to obtain as a table grows. Since SQL Server 2008 SP1, using trace flag 2371, you could override this behavior to make that 20% threshold more dynamic (read “smaller”) the larger the table in terms of row count as the following image demonstrates:

Image courtesy of Microsoft: https://blogs.msdn.microsoft.com/saponsqlserver/2011/09/07/changes-to-automatic-update-statistics-in-sql-server-traceflag-2371/

As of SQL Server 2016 this trace flag’s behavior is the new standard for automatic statistics updating and TF2371 no longer needs to be employed. As is the case with AUTO_CREATE_STATISTICS, the statistics meeting the standard of being out of date will be updated as part of building a query execution plan. Consider the updating of the statistics in this fashion to implicitly occur synchronously with the generation of the execution plan. This setting is ON by default.

AUTO_UPDATE_STATISTICS_ASYNC Explained

When set to ON, AUTO_UPDATE_STATISTICS_ASYNC overrides the synchronous behavior of updating statistics automatically to allow for asynchronous updating. Essentially what this means is that stats updates are no longer beholden to occur during the execution plan creation phase but rather as SQL Server sees fit. The tradeoff from decoupling the overhead of statistics updates with execution plan generation is that you may end up with outdated statistics being utilized to craft an execution plan which may (or may not) impact performance. If values changed in the subset of data associated with the statistics object, but not so that it alters then plan then this is of no issue since the same plan would have been used if the stats were updated as part of the query plan generation. However, if the changes altered whether a seek was to be used versus a scan for example, you may impact performance negatively in selecting to decouple the statistics updating from plan generation. This setting is OFF by default.

What Comes Next

This was an introduction to the statistics and how their behavior is guided at the database level. The next article in this series digs deeper into what the automatic statistics creation process looks like and how to query for basic statistics information.

About the Author(s)

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)

_(1).png?width=700&auto=webp&quality=80&disable=upscale)

.png?width=700&auto=webp&quality=80&disable=upscale)