Q&A: US Patent and Trademark Office's CIO on Cloud and DevSecOps

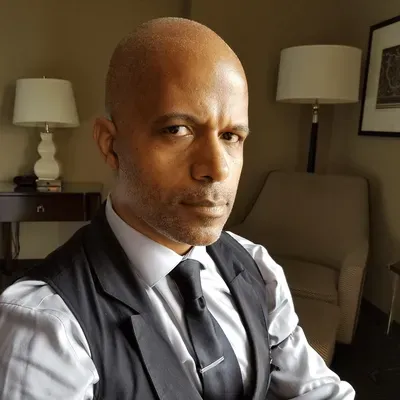

Jamie Holcombe talks about developing a "software factory" that draws on DevSecOps methodology and GitLab to help modernize software development within his agency.

Even the federal agency that puts its stamp on new, original innovations has had to update its infrastructure using DevSecOps for a cloud-based world.

The United States Patent and Trademark Office (USPTO) dealt with a software system outage in 2018 that disrupted the patent application filing process and exposed a need for more effective data recovery. The downing of the Patent Application Locating and Monitoring system, which tracks progress in the patent process, along with other legacy software applications, helped prompt changes at the federal agency.

Jamie Holcombe, the USPTO's Chief Information Officer, spoke with InformationWeek about using modern resources such as GitLab, along with DevSecOps methods, to speed delivery of IT updates, as well as shifting to the cloud and improving resiliency.

What was happening at the USPTO that drove the changes you made? What was the pain point?

What was the burning platform? Why did you have to jump off into the sea because everything around was just going to hell? Well, what had happened was, before I even arrived at the agency, the Patent and Trademark Office experienced an 11-day outage where over 9,000 employees could not work.

Why couldn't they work? They were using old, outdated applications (which is okay; everybody uses old apps), but they didn't practice how to come back and be resilient. When they took out those backup files to lay over the top and bring the database up, they didn't know how, and they failed not once but twice.

The third one they were able to lay over the top and got back continuity of operations. The only problem was it was 9 petabytes of information and they had failed to back up the indices of the database. So, it took over eight days to rebuild the indices. That's a lesson learned.

That was the burning platform. Then there were a lot of complaints by the business that IT was slow and "You can never deliver on the new stuff."
