How to Troubleshoot a SQL Server 2016 Migration

Why is it important to note the distinction between Enterprise and Enterprise Core editions of SQL Server? Because server utilization depends on which one you install.

I was involved with a client in a migration of a production environment from SQL Server 2012 to SQL Server 2016. The dozen servers involved were provisioned months and weeks prior to the migration event, and the plan - tested repeatedly with great success - was as follows:

1. Employ log shipping to keep the various servers in sync right up until the point of migration.

2. Remove the ability for users to connect.

3. Perform all necessary cutover tasks on both the data platform and application tier.

4. Take and apply a final log backup.

5. Upgrade the compatibility setting to 2016 (130).

6. Perform some routine tests, then finally let everyone back into the environment.

The actual process took a little more time – it always does when you’re dealing with real connections that don’t want to complete or roll back – but everything went swimmingly otherwise.

Then we started to notice issues with worker threads when the environment was back up to peak load. Something was missed that was not caught with full load applied to the environment. I'm going to share what we found, how we found it, and how we fixed it.

How to Notice Symptoms of Something Overlooked

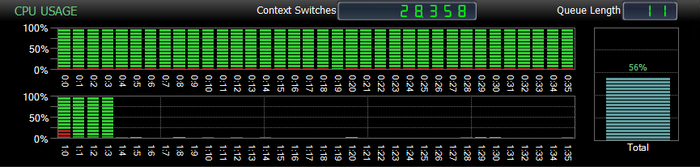

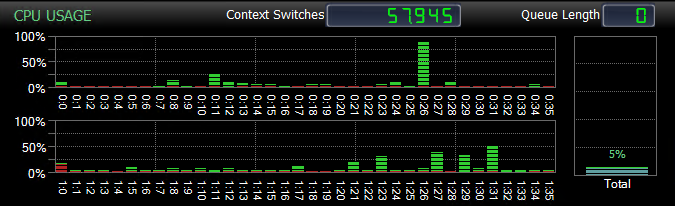

Soon after the all-clear was given to let users back into the SQL server environment, we began noticing issues related to high CPU, yet we were only hitting around 50% of available CPU according to our monitoring software. What was also strange was that the CPU load didn’t appear to be borne equally across all 72 cores on the server. That led to a NUMA imbalance:

As you can see from the above image, this server was consuming almost 100% CPU on the 40 cores that were in use, but the remaining 32 cores were virtually untouched. If you do the math, the 56% measured CPU is across all cores, whether utilized or not. But the server was 100% utilized for the 40 cores it was able to use.

The question is why was SQL Server only using 40 cores when the server had 72 at its disposal?

We started looking for more information. For me, “more information” when SQL Server is involved usually means hitting the Dynamic Management Objects (or DMOs for short.) In order to get further insight into how the cores were “seen” by SQL Server I employed a query against the sys.dm_os_schedulers DMO to check counts grouped by status:

SELECT OS.status, COUNT(OS.status)

FROM sys.dm_os_schedulers AS OS

GROUP BY OS.status

ORDER BY OS.status;

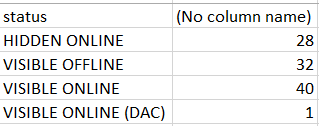

Here were the results, which were not encouraging:

Exactly what did this mean? At a pure metadata level, what the results told us was that we had 40 schedulers that were able to be utilized by the SQL Server service (VISIBLE ONLINE) but still had additional schedulers that were not accessible in their current state. These were comprised of HIDDEN schedulers are used to service system-level workloads and are never accessible to end-user requests – the counts for HIDDEN ONLINE were acceptable and expected by the definition of their classification, as well as the singular VISIBLE ONLINE (DAC) schedulers is reserved for the dedicated administrator connection (DAC). This leaves the remaining 32 schedulers listed as VISIBLE OFFLINE that should be accessible to end-user workloads, but were not. A VISIBLE OFFLINE scheduler is one that, though addressable, is not allowed to act on SQL Server workloads for at least one reason. CPU throttling such as this was not something I had encountered in my 20 years as a SQL Server DBA, nor as a technical educator, or MVP.

The interesting thing is that this same issue was being observed across all servers that were employed in the upgrade to SQL Server 2016: All were core-limited at 40 hyper-threaded cores, meaning 20 cores were in use. It was around that time a few individuals all seemed to identify a blog post from a fellow Data Platform MVP, John Morehouse. It was in his post that he mentions running across a similar issue in SQL Server 2012 related to licensing.

At the heart of the matter, this lack of server utilization was occurring because the incorrect edition of Microsoft SQL Server 2016 was installed. Since it’s highly likely that the same install package was used for all servers involved it explained why all servers were experiencing the same issue. Incorrect edition? Let me explain.

Two Possible Install Options for Enterprise Edition

There were likely two issues that led to the incorrect option of Enterprise Edition SQL Server 2016 being installed:

Changes in licensing for SQL Server implemented in 2012.

The Microsoft penchant for using similar names/acronyms for different technologies/features.

In 2012, Microsoft altered the licensing approach for SQL Server to switch to a core-based license. This led to a forking of options for installs of SQL Server to have both a CAL-based license as well as a per-core license. Licensing in and of itself is a very deep topic and is outside the scope of this article, but it does have impact in that there were/are two different options for install executables as a result of this licensing change.

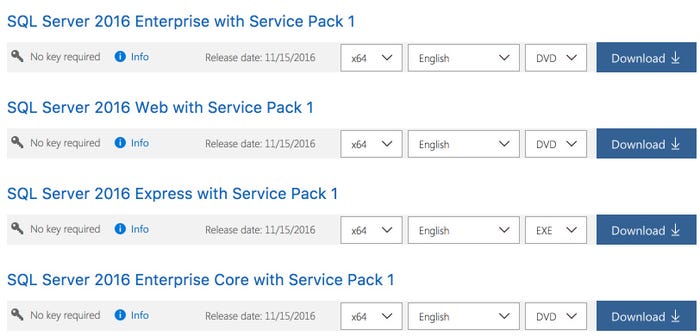

Additionally, Microsoft has a history of re-purposing acronyms and terms across different products (and in the case of the DAC acronym within the same product.) In this case there was likely confusion around the term “core”. This is because there is also a “Server Core” version of Microsoft Windows that installs a non-GUI version of Windows that SQL Server can be installed on. The install process is quite different from the standard process since there is no GUI capability on the server core version of Windows Server. (The process for installation on Windows Server Core is outlined here.) I could easily see when the following options are presented that whomever chose the bits for install mistook the “Core” edition for SQL Server 2016 as a version designed for Windows Server Core and not as “core” pertains to SQL Server – that of which is a definition of core licensing.

From the above options taken from the Microsoft MSDN download page you could see how that misconception could arise.

Why am I belaboring the subject of Enterprise v. Enterprise Core editions of SQL Server? Because if you chose the version that is not specifically labeled “Core” you’re installing a Server+CAL licensed SKU of SQL Server that is limited to (you guessed it) 20 cores – 40 if hyper-threading is involved.

Now it becomes obvious where the 40 VISIBLE ONLINE schedulers are originating while 32 remain non-addressable even though they’re visible. It’s because licensing is preventing those cores from being enlisted into service. First impressions are one of horror: thoughts of having to go through an entire emergent migration to hardware that is already in use to get to the “correct” SKU of SQL Server. Imagine those thoughts and all the frantic thoughts that follow it and you have an idea of initial state of those involved in the migration.

However, there is a better and easier way for fixing this licensing issue, one that was both aided and hindered by the underlying high availability architecture we put into place as part of the upgrade and migration: Distributed Availability Groups (DAGs).

Distributed Availability Groups Complicated Matters

Availability Groups are advancements on the high availability and disaster recovery (HA/DR) concepts of both Database Mirroring and Server Clustering.

An Availability Group (or AG) is comprised of two or more servers (“replicas”) that are nodes in a Windows Server Failover Cluster (WSFC) and do not share storage resources like a typical WSFC does.

Instead, AGs use the WSFC as a foundation for high availability of the O/S by allowing multiple servers from “owning” the SQL Services but since shared storage isn’t involved in this architecture (where a clustered instance of SQL Server is hosted on shared storage) this architecture involves multiple copies of a database that is part of an AG rather than a single copy, just like Database Mirroring. Unlike Database Mirroring however, any of these replicas of the database are readable, while one is both readable and writeable. I’m definitely over-simplifying the architecture of Availability Groups, but the point is, this is an architecture where a clustered set of servers acting together to host SQL Server is employed.

This side discussion about AGs is relevant because -- just like a clustered instance of SQL Server -- any fix has to be first applied to the secondary (non-primary) replica/instance/node, followed by a failover to change ownership so the formerly primary node can then have the same fix applied. The fix we had to employ to resolve the throttling of CPU had to be applied to both servers in the AG… and there were three AGs.

There is a common tagline to many television commercials. “But wait! There’s more!” and in the case of this architecture there was more because these were not just Availability Groups these were Distributed Availability Groups.

What are Distributed Availability Groups (or DAGs?) They’re an Availability Group of Availability Groups that are employed to allow for geo-dispersed AG replicas across data centers This allows for such things as site DR/redundancy. In an AG, you must be concerned about latency between each replica. AGs, like Database Mirroring, can be configured to be either synchronous or asynchronous. The first requires any transaction on the primary replica to be hardened on the secondary replica before it’s committed on the primary replica. The later assumes that there will be eventual consistency between the replicas and removes the two phase commit requirement from the architectural configuration.

Even with asynchronous committal there are still stringent needs for low latency in the queues that serve as the conduit between the two replicas. That is hard to accomplish with geographically separated replicas, so instead of allowing for an AG with “remote” replicas, there is the DAG. In a DAG you end up with two AGs – one per data center. At any given time a single AG “owns” the DAG and in that primary AG, there is a primary replica that owns the AG and, by default, the associated DAG. At the minimum you’ll have four replicas where one is primary and three are secondary. Now the fix changes from having to be applied to one instance of SQL Server if this was a standalone environment to two instances because of AGs to now four instances because these are DAGs. Finally, multiply that by three separate DAGs due to internal structuring of the SQL Server environment and this changes from one fix to twelve. Now you may have further insight into why a complete rebuild of a production system in the middle of a migration was not an option to pursue.

The Fix

The fix for this issue ends up being much less impactful than was originally feared and stems from the same process that allows for version upgrades from Standard Edition to Enterprise Edition that is integrated into the SQL Server installation wizard. It’s possible to upgrade out of the Server + CAL licensing into per-core licensing with a single command line statement. The process is as follows:

Download the correct version of the install files.

Mount the .iso for the installation and launch setup.exe.

Identify the PID – another name for that that long security key combination of letters, hyphens, and numbers.

Cancel out of the install.

Run cmd as Administrator

Navigate to the directory where the setup.exe file is for your downloaded and mounted media

Substitute that PID value into the command below:

Setup.exe /q /ACTION=editionupgrade /INSTANCENAME=MSSQLSERVER /PID=XXXXX-XXXXX-XXXXX-XXXXX-XXXXX /IACCEPTSQLSERVERLICENSETERMS

Hit enter and wait. The process runs through an edition upgrade which is a metadata change but still can take a few minutes and cause even the most patient DBA some concern. When the command prompt reappears it’s done.

Unmount the mounted .iso file and clean up unnecessary files.

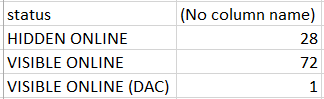

This command switches the licensing model and frees up the VISIBLE OFFLINE schedulers effectively removing the cap on CPU throttling:

As you can also see this fixed our misaligned NUMA:

I ended up having to perform this first against the secondary AG’s secondary replica, then failover the secondary AG and run the same command against the former primary replica. Next it was the matter of doing that same process on the AG that owned the DAG. The process does cause the SQL Services to restart as part of the fix but since I was not patching the primary replica at any given time there was no impact to the end users beyond what occurs with a standard AG failover.

In Conclusion

Since then the upgrade has been highly successful. Moving from SQL Server 2012 to 2016 has led to less latency, better overall performance, and the ability to start utilizing the Query Store and other features available in SQL Server 2016. Now it’s time for them to start planning their migration to SQL Server 2017!

About the Author

You May Also Like

.jpg?width=700&auto=webp&quality=80&disable=upscale)