Microsoft Details How It's Building AI Cloud Infrastructure

At the Build 2024 conference, Microsoft Azure's CTO described how the cloud giant is building out its infrastructure for AI demands.

How is Microsoft building out its own cloud infrastructure to support the growing demands of AI? That's a question that Microsoft Azure CTO Mark Russinovich answered in a session at this week's Microsoft Build 2024 conference.

AI demand has required Microsoft to build out a massive AI infrastructure to power the next generation of large language models (LLMs). Russinovich said Microsoft has scaled its AI infrastructure 30-fold since November 2023. That scale isn't just about more GPUs and servers; it also requires more cabling and back-end infrastructure to support them.

"Efficiency is the key to the game," Russinovich said. "If you take a look at what's happening, to challenge the ability for us to be efficient, you can see that the sizes of these frontier models have continued to grow, basically, exponentially."

Where Microsoft Is Innovating Cloud AI Infrastructure

The rapid growth of model sizes is driving exponential increases in GPU performance, memory capacity, and power consumption. Russinovich said the latest Nvidia GPU, with all its high-bandwidth memory and transistors, consumes 1,200 watts for a single GPU.

"We cannot push enough air through our data centers to cool these kinds of systems," he admitted.

The solution is liquid cooling, and Microsoft's custom Maia racks are the first liquid-cooled deployments in Azure data centers.

"Maia is our first step toward the new design of data centers in the cloud," he said. "Maia is a liquid-cooled system."

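Beyond cooling, Microsoft is also innovating in several other cloud infrastructure areas to support larger scale. One such area is power oversubscription, in which more hardware is provisioned than the facility's power budget could supply if every server drew peak power at once, allowing Microsoft to make fuller use of the power it has.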

Another area is high-speed networking, where Microsoft is running the InfiniBand interconnect, instead of Ethernet, to get better scale and performance. Additionally, Microsoft has built out its own custom storage accelerators to help get data in and out of models quickly.
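To make the power oversubscription idea concrete, here is a minimal sketch of the arithmetic involved. It is not Microsoft's mechanism, which the session summary does not detail. The `Server` type, the function names, and the proportional-capping policy are all hypothetical, chosen only to illustrate the general technique.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    peak_watts: int      # assumed worst-case (nameplate) draw
    typical_watts: int   # assumed draw under ordinary load


def oversubscription_ratio(servers, budget_watts):
    """Sum of peak draws divided by the budget; above 1.0 means oversubscribed."""
    return sum(s.peak_watts for s in servers) / budget_watts


def cap_factor(draws_watts, budget_watts):
    """Fraction each server's draw must be scaled by so the total fits the budget.

    Returns 1.0 when the current total is already within budget.
    This uniform scaling is an illustrative policy, not a real product's behavior.
    """
    total = sum(draws_watts)
    return 1.0 if total <= budget_watts else budget_watts / total
```

With ten hypothetical servers rated at 1,000 watts peak and 700 watts typical behind an 8,000-watt budget, the ratio is 1.25: the servers fit comfortably at typical load, but if all of them hit peak at once, each would have to be throttled to 80% of its draw.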

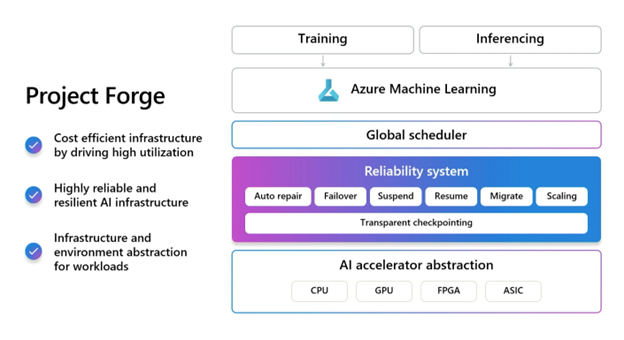

Project Forge Is Microsoft's AI Workload Platform

At the software layer, Microsoft has built Project Forge — an internal AI workload platform that treats all GPUs globally as an elastic resource pool.

Source: Microsoft

"The idea with one pool is everybody gets a virtual GPU, not physical GPUs," Russinovich explained. "If a premium job comes in and there's a low priority job running on the GPUs it needs, low priority gets evicted."

Project Forge achieves high utilization by reassigning GPUs between jobs, Russinovich said, and so far the results have been positive.

"If you take a look in aggregate across all of Microsoft, for all of our first-party training, we're getting over 95% utilization," Russinovich said.
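The eviction behavior Russinovich describes, where a premium job displaces lower-priority work on a shared pool, can be sketched as a simple priority-preemption scheduler. This is an illustration only, not Project Forge's actual design. The `Job` and `GpuPool` classes, the integer priority convention, and the lowest-priority-first eviction order are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    gpus: int
    priority: int  # assumed convention: higher number = more important


@dataclass
class GpuPool:
    capacity: int
    running: list = field(default_factory=list)

    def free(self):
        """GPUs not currently assigned to any running job."""
        return self.capacity - sum(j.gpus for j in self.running)

    def submit(self, job):
        """Start `job`, evicting lower-priority jobs if needed.

        Returns the list of evicted jobs (possibly empty), or None if the
        job cannot fit even after evicting every lower-priority job, in
        which case the pool is left unchanged.
        """
        # Only strictly lower-priority jobs may be evicted, lowest first.
        victims = sorted(
            (j for j in self.running if j.priority < job.priority),
            key=lambda j: j.priority,
        )
        evicted = []
        available = self.free()
        for victim in victims:
            if available >= job.gpus:
                break
            evicted.append(victim)
            available += victim.gpus
        if available < job.gpus:
            return None
        for victim in evicted:
            self.running.remove(victim)
        self.running.append(job)
        return evicted
```

In an 8-GPU pool running a hypothetical 6-GPU low-priority job, submitting a 4-GPU premium job evicts the low-priority job and starts the premium one. A real system would presumably also checkpoint and later resume the evicted work, which this sketch omits.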
