For decades, only academics, government scientists, and researchers working for large companies, such as the ones designing sports cars or searching for oil fields, have had access to the world’s fastest computing systems.

But cloud providers are changing that, broadening access to supercomputers beyond those exclusive groups. The trend is driven to a large extent by the rapid adoption of machine learning.

Today at SC19, the supercomputing conference being held in Denver, Nvidia and Microsoft announced an Azure cloud supercomputer service that anyone can use, spinning it up the same way they would a regular x86 server in the cloud and paying only for the time they use it.

Google announced a cloud supercomputer service of its own earlier this year, powered by the company’s custom TPU processors. While Azure did announce an HPC cloud service as early as 2017, it said at the time that it would offer the Cray supercomputer infrastructure on a “customer-specific provisioning” basis, meaning it would deploy HPC systems for large customers case by case.

The Azure service announced Monday is a more traditional public cloud service, where a customer can order the number of instances they need, see them spin up quickly, deploy and run their software on them, and spin them down when finished.

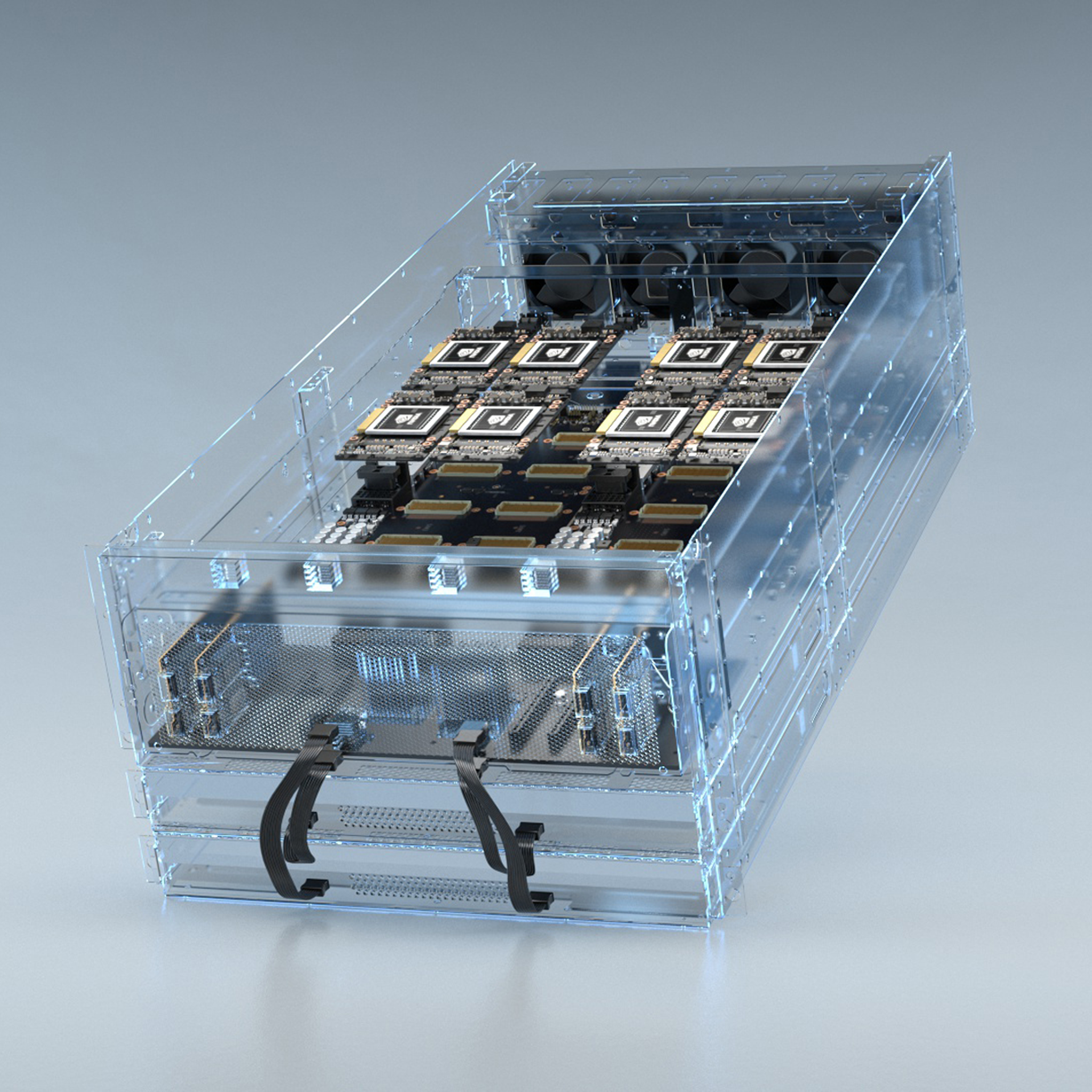

Currently available in preview only, the new instances are called NDv2. They are powered by Nvidia V100 Tensor Core GPUs and interconnected by an InfiniBand network designed by Nvidia-owned Mellanox, capable of linking together up to 800 GPUs, according to Nvidia.

A single instance is powered by eight GPUs, which is the same number of GPUs used in Nvidia’s DGX-1 supercomputer for AI.

Using a cluster of 64 NDv2 instances, Microsoft and Nvidia engineers trained a BERT conversational AI model in about three hours, Nvidia claimed.
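To put those figures in perspective, here is a minimal back-of-the-envelope sketch in Python. It uses only the numbers quoted above and assumes that every instance contributes all eight of its GPUs and that the 800-GPU limit applies to a single cluster; the function and constant names are illustrative, not part of any Azure or Nvidia API.

```python
# Figures quoted in the article (attributed to Nvidia).
GPUS_PER_INSTANCE = 8     # V100 GPUs in one NDv2 instance
MAX_LINKED_GPUS = 800     # GPUs the InfiniBand network can link together
BERT_RUN_INSTANCES = 64   # NDv2 instances used in the BERT training run


def total_gpus(instances: int, gpus_per_instance: int = GPUS_PER_INSTANCE) -> int:
    """Number of GPUs in a cluster of the given number of instances."""
    return instances * gpus_per_instance


def max_instances(max_gpus: int = MAX_LINKED_GPUS,
                  gpus_per_instance: int = GPUS_PER_INSTANCE) -> int:
    """Largest whole number of instances that fits under the GPU limit."""
    return max_gpus // gpus_per_instance


bert_gpus = total_gpus(BERT_RUN_INSTANCES)
largest_cluster = max_instances()

print(f"GPUs in the 64-instance BERT run: {bert_gpus}")
print(f"Instances needed to reach the 800-GPU limit: {largest_cluster}")
```

Under those assumptions, the BERT run used 512 GPUs, and the 800-GPU ceiling corresponds to 100 NDv2 instances.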

Also at the show, Nvidia announced a new reference architecture for GPU-accelerated Arm servers. Arm chipmakers Ampere and Marvell, supercomputer maker Cray (now owned by Hewlett Packard Enterprise), Fujitsu, and Arm itself collaborated with Nvidia on the design.

Earlier this year, Nvidia said its CUDA-X software library for GPU-accelerated computing would soon support Arm CPUs. On Monday, it released a preview version of its “Arm-compatible software development kit.”

Reference architecture for an Nvidia GPU-accelerated Arm server

Arm rounds out the set of CPU architectures Nvidia supports, which already includes x86 chips by Intel and AMD, as well as IBM’s Power architecture.

Finally, on Monday at SC19, Nvidia introduced Magnum IO, a suite of software designed to speed up AI and HPC clusters by removing storage and IO bottlenecks. The company claims as much as a 20-fold performance improvement.

It achieves the improvement by enabling GPUs, storage, and networking devices in a cluster to bypass CPUs when exchanging data, thanks to a technology called GPUDirect.

Magnum IO is available now, but a piece called GPUDirect Storage, which enables researchers to bypass CPUs when accessing storage, isn’t available outside of a select group of “early-access customers.” Nvidia is planning a broader release of GPUDirect Storage for the first half of 2020.