Cerebras Systems wowed the tech press this week with its announcement of what it claimed to be the world’s largest and fastest processor, but the AI hardware startup has even bigger plans for this fall, when it expects to debut a server powered by its super-sized chip and built specifically for artificial intelligence applications.

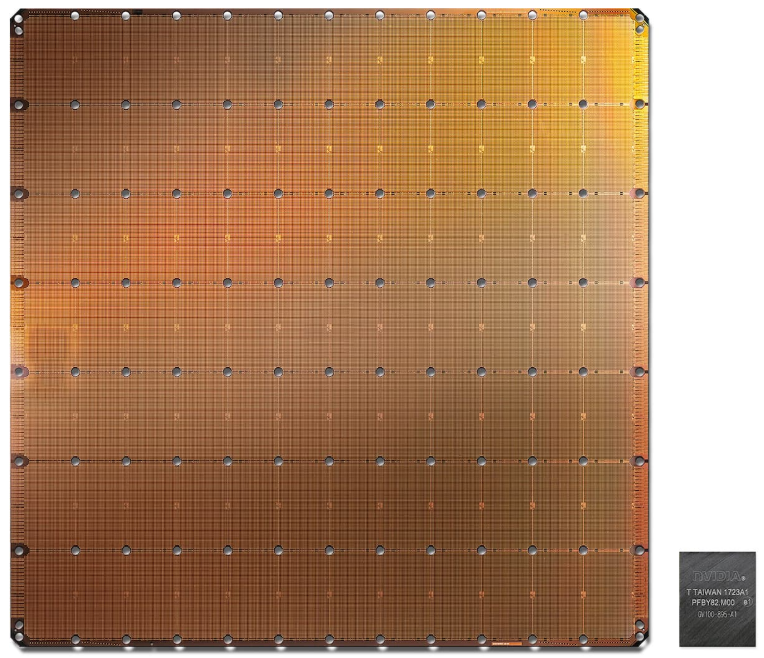

The company, based in Los Altos, California, said its Wafer Scale Engine (WSE) chip was 56.7 times larger than the largest GPU on the market and featured 3,000 times more high-speed on-chip memory and 10,000 times more memory bandwidth.

The server Cerebras plans to announce in November will enable companies to run AI workloads in data centers at unprecedented speeds, Andrew Feldman, the company’s CEO and co-founder, told Data Center Knowledge in an interview.

In 2012, Feldman sold SeaMicro, another server maker he founded, to AMD for $334 million. Afterwards, he spent several years leading AMD’s effort to build an Arm server business, an effort that eventually failed.

The Cerebras server, which will be sold to enterprises and large cloud providers, is optimized for AI training and large-scale inference, Feldman said. In AI, deep learning algorithms must be trained on big data sets, and once trained, inference puts a trained model to work to draw conclusions or make predictions.

“The hard part is the training. It takes huge amounts of compute, and today it’s expensive, time-consuming, and limits innovation,” Feldman said. “We reduce AI training time from months to minutes and from weeks to seconds, and that means researchers can test thousands of new ideas in the same period of time.”

The lucrative AI hardware market Cerebras is targeting is currently dominated by machine-learning systems that run on GPUs by Nvidia.

“It is an extremely aggressive and audacious plan, because they are building something that has not been successfully built – a wafer-scale chip,” Tirias Research principal analyst Kevin Krewell told us. “It’s required them to invent a whole raft of new technologies for handling the die, the cooling, and feeding power into it, and therefore, it pushes the envelope. It’s a moonshot kind of thing.”

Manufacturers typically etch multiple processors on a single silicon wafer. Some of the individual chips often come out defective, but producing many of them per wafer keeps the loss margin acceptable from a business perspective. Etching a single chip on a wafer leaves little room for manufacturing errors.
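To see why die size matters so much, consider a rough sketch using the textbook Poisson yield model, in which the share of defect-free dies falls exponentially with die area. The defect density and the small-die area below are hypothetical values picked for illustration, not figures from Cerebras or any foundry; the large-die area assumes the 8-inch-by-8-inch dimensions the company cites, and the model assumes a single defect ruins a die.

```python
import math


def poisson_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Expected fraction of dies with zero defects under a Poisson model."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


# Hypothetical values, chosen only to illustrate the trend.
DEFECTS_PER_CM2 = 0.1   # assumed defect density
SMALL_DIE_CM2 = 1.0     # assumed area of a conventional chip

# Area of an 8-inch-by-8-inch die, converted to square centimeters.
WAFER_SCALE_DIE_CM2 = (8 * 2.54) ** 2

small_yield = poisson_yield(SMALL_DIE_CM2, DEFECTS_PER_CM2)
wafer_scale_yield = poisson_yield(WAFER_SCALE_DIE_CM2, DEFECTS_PER_CM2)

print(f"Small die yield:       {small_yield:.1%}")
print(f"Wafer-scale die yield: {wafer_scale_yield:.2e}")
```

Under these assumptions, roughly nine in ten small dies come out clean, while a wafer-sized die with no tolerance for defects would almost never do so.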

Cerebras says its WSE chip is 56 times the size of the largest GPU on the market.

Krewell said he has talked to competitors in the AI processor market who have alternatives to Cerebras’ approach that may scale even better. Cerebras has huge potential, provided its technology works as advertised, but it’s too soon to know, he said.

“We need to get people using it and get real benchmarks. We don’t know pricing on it yet and how many they can make. It’s also super important for them to come up with the right software to manage it and make efficient use of that big chip,” Krewell said. “There’s a fair amount of unknowns, but certainly the technology has a ‘wow’ factor.”

Nvidia’s AI Hardware in Startup’s Crosshairs

Cerebras’ WSE processor measures 8 inches by 8 inches and contains more than 1.2 trillion transistors, 400,000 computing cores, and 18GB of on-chip memory. In contrast, the Nvidia V100 GPU has 5,120 computing cores and 6MB of on-chip memory.
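The ratios implied by those figures can be checked with quick arithmetic. The sketch below uses only the core counts and memory sizes quoted above and assumes decimal units (1GB = 1,000MB); the variable names are illustrative.

```python
# Figures as quoted in the article.
WSE_CORES = 400_000
V100_CORES = 5_120

# Decimal units are an assumption: 1GB = 10**9 bytes, 1MB = 10**6 bytes.
WSE_MEMORY_BYTES = 18 * 10**9
V100_MEMORY_BYTES = 6 * 10**6

core_ratio = WSE_CORES / V100_CORES
memory_ratio = WSE_MEMORY_BYTES / V100_MEMORY_BYTES

print(f"Cores:          {core_ratio:.1f}x")
print(f"On-chip memory: {memory_ratio:,.0f}x")
```

The memory ratio works out to 3,000, in line with the company's claim of 3,000 times more on-chip memory, while the core count is about 78 times that of the V100.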

“This problem of building very big chips: people have been trying to do it for 60 years in our industry, and we are the first to succeed,” Feldman said.

The processor enables Cerebras to build a deep learning system that performs better than a system with 1,000 GPUs, the startup claims. More specifically, it can deliver more than 150 times the performance of the Nvidia DGX-1 AI supercomputer while using 1/40th the space and 1/50th the power, according to Cerebras.

Its upcoming server will have many other innovations as well, Feldman told us.

“Many of our patents are around system-level issues: how you deliver power, how you cool, how you deliver workloads,” he said. “It is a single system that will replace hundreds or thousands of GPUs,” he added.

Large potential customers are already testing Cerebras’ AI hardware, the company said. Feldman predicted that the new server will allow researchers to explore types of neural networks that are impossible with existing technology.

“These are larger networks and sparse networks. These are wide or shallow networks. These are networks that can’t be explored today, but with our equipment, now you can,” he said.

That means neural networks that are faster and deeper (with more layers), resulting in more accurate models, Krewell explained.