Nothing teaches IT leaders more about their systems than a failure. Just ask the IT team at GitLab.com, which lost 300GB of user data from its primary database server on Jan. 31 after a technician made a simple data entry mistake while trying to wipe a PostgreSQL database directory.

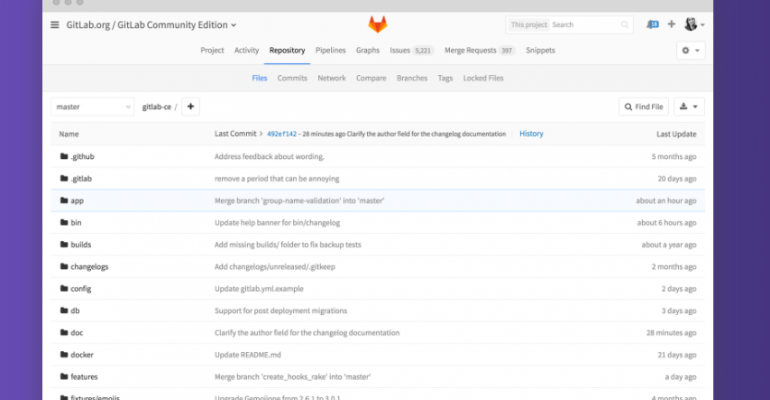

Instead of trying to wipe the directory on a secondary server, the technician's command entry referred to the primary server, wiping out the user data in the few seconds it took for the technician to realize his mistake, GitLab reported in a Feb. 10 blog post about the failure. GitLab offers several online and on-premises applications that allow users to code, test and deploy software projects.
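One common safeguard against this kind of wrong-server mistake is to make a destructive script confirm which machine it is running on before it does anything. The sketch below is illustrative only and is not GitLab's tooling: the function names and the host names `db-primary` and `db-secondary` are hypothetical, and the wipe itself is replaced by a message.

```python
import socket


class WrongHostError(RuntimeError):
    """Raised when a destructive action is attempted on an unexpected host."""


def require_host(expected_host, actual_host=None):
    """Abort unless the current machine is the one the operator intended."""
    if actual_host is None:
        actual_host = socket.gethostname()
    if actual_host != expected_host:
        raise WrongHostError(
            f"refusing to continue: running on {actual_host!r}, "
            f"expected {expected_host!r}"
        )
    return actual_host


def wipe_data_directory(path, expected_host, actual_host=None):
    """Hypothetical wrapper: check the host first, then report what would be wiped."""
    require_host(expected_host, actual_host)
    # A real script would delete `path` here; this sketch only reports the intent.
    return f"would wipe {path} on {expected_host}"
```

Called as `wipe_data_directory("/var/lib/db", "db-secondary")` on a machine whose host name is `db-primary`, the wrapper raises `WrongHostError` before any data is touched.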

The directory wipe was made in response to what was believed to be an increase in database load due to a spam attack. The increased load also turned out to be caused by a background job which was trying to remove a GitLab employee and their associated data after an account was flagged for abuse, the post continued. The spam attack and the other load caused the secondary's replication process to lag, which meant the secondary had to be re-synchronized.

Several initial repair attempts failed, which led an engineer to take the action that ultimately deleted the 300GB of data on the primary server and brought the company's free GitLab.com SaaS platform for application development to a standstill while repairs were made. Some 5,000 projects and 700 new user accounts were affected by the data loss, the company stated in its post. Customers of the company's paid GitLab Enterprise product, its GitHost offering and its self-hosted GitLab CE product were not affected by the outage or data loss.

The company quickly posted about the incident and apologized to users and customers for the downtime and "unacceptable" data loss experienced in the disaster.

So what did the GitLab.com experience teach the company and how can those experiences benefit other users?

For GitLab, the incident provided some important lessons, including that a range of backup, disaster recovery and procedural improvements would be required to ensure that such a situation does not happen again, Tim Anglade, a spokesman for the company, told WindowsITPro.

The primary lesson from the incident is that "there's no such thing as a valid backup – there's only valid restores," said Anglade. "That's an old saying, but it applies to us."
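In practice, "valid restores" means routinely restoring a backup into a scratch database and checking that the data actually came back. The sketch below shows the idea using Python's built-in SQLite module as a stand-in for PostgreSQL, purely so that it is self-contained. The function name and the row-count comparison are assumptions for illustration, not GitLab's procedure, and a real check would compare far more than one table's row count.

```python
import sqlite3


def restore_matches_source(source, backup_path, table):
    """Back up `source`, restore the backup into a fresh database, compare row counts."""
    # Step 1: write the backup to disk.
    backup_conn = sqlite3.connect(backup_path)
    source.backup(backup_conn)
    backup_conn.close()

    # Step 2: restore from the file into a brand-new in-memory database.
    from_disk = sqlite3.connect(backup_path)
    restored = sqlite3.connect(":memory:")
    from_disk.backup(restored)
    from_disk.close()

    # Step 3: the restore only counts as valid if the data actually came back.
    # `table` is interpolated directly, so pass trusted table names only.
    query = f"SELECT COUNT(*) FROM {table}"
    expected = source.execute(query).fetchone()[0]
    actual = restored.execute(query).fetchone()[0]
    restored.close()
    return expected == actual
```

The point of the design is that the check reads only from the restored copy, so a backup file that exists but cannot be restored fails the test instead of passing unnoticed.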

During the database server performance drop, GitLab experienced multiple resource failures that technicians were not aware of until they cascaded into a larger failure. "We had backups that weren't being generated properly and others that couldn't be restored, which led to an operator doing a manual restore. That's the event which caused the problems."
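Silent backup failures of this kind can often be caught by a scheduled check that flags backup files that are missing, suspiciously small or out of date. The following is a minimal sketch of such a check. The function name and the thresholds are hypothetical and would need tuning for a real environment.

```python
import os
import time


def backup_problems(path, max_age_seconds, min_bytes, now=None):
    """Return a list of reasons a backup file looks unhealthy (empty list means OK)."""
    if now is None:
        now = time.time()
    if not os.path.isfile(path):
        return ["missing"]
    problems = []
    info = os.stat(path)
    # A near-empty file usually means the dump failed part-way or wrote nothing.
    if info.st_size < min_bytes:
        problems.append("too small")
    # An old modification time means the backup job has stopped producing output.
    if now - info.st_mtime > max_age_seconds:
        problems.append("stale")
    return problems
```

A monitoring job could run this against the latest backup and raise an alert whenever the returned list is not empty, so that operators hear about a failed backup before they need it.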

The incident has also taught the company that it had inadvertently allowed its success to outgrow its critical IT procedures and systems, said Anglade. "GitLab.com for us started as a side business, which a lot of people started using and we didn't account for that growth enough," he said. "Success itself can cause massive usage, which can create issues," such as the recent spam attacks on its servers. The company had become a target for spammers and has now learned it needs to set up systems to better fight such attacks in the future.

Other lessons garnered from the incident involve factors outside of engineering, such as the need to provide continuous training for the people who are charged with maintaining the company's IT infrastructure, said Anglade.

"A lot of engineering is people who interact with the technology and build the technology and that can lead to failures," he said. "We need to better prepare people properly and have improved procedures." New training and hiring is being done to help the company's workers stay ahead of needed system improvements, he added.

One thing GitLab did well after the data loss incident is that its leaders communicated quickly and openly with users and customers to explain what had happened, how it was being fixed and how it would be prevented in the future, said Anglade. Still, the company could have done a better job of clearly identifying which specific users were affected by the data loss and which were not, so that unaffected customers would have been better informed and less concerned, he added.

GitLab.com did lose some online application development projects and users after the server incident but has reportedly not lost any paying customers of its other services since the incident, said Anglade.

The company certainly won't be the last to experience this kind of systems failure. Incidents like these happen regularly inside companies because complex IT systems do fail.

And that's a lesson worth remembering, because even the best-laid IT plans can later be found to have vulnerabilities no one previously imagined. GitLab.com learned that serious lesson on Jan. 31. Now's the time to do some extensive self-evaluations of your company's own IT infrastructure to prevent similar problems in your future.