Artificial intelligence as a technology is rapidly growing, but much is still being learned about how AI and autonomous systems make decisions based on the information they collect and process.

To better explain those relationships, so that humans and autonomous systems can understand each other and collaborate more deeply, researchers at PARC, the Palo Alto Research Center, have been awarded a multimillion-dollar federal government contract to create an "interactive sense-making system" that could answer many related questions.

The research for the proposed system, called COGLE (Common Ground Learning and Explanation), is being funded by the Defense Advanced Research Projects Agency (DARPA). The work will use an autonomous Unmanned Aircraft System (UAS) test bed, but the results would later be applicable to a variety of autonomous systems.

The idea is that since autonomous systems are becoming more widely used, it would behoove the humans who use them to understand how the systems behave based on the information they are provided, Mark Stefik, a PARC research fellow who runs the lab's human-machine collaboration research group, told ITPro.

"Machine learning is becoming increasingly important," said Stefik. "As a consequence, if we are building systems that are autonomous, we'd like to know what decisions they will make. There is no established technique to do that today with systems that learn for themselves."

In the field of human psychology, there is an established history about how people form assumptions about things based on their experiences, but since machines aren't human, their behaviors can vary, sometimes with results that can be harmful to humans, said Stefik.

In one moment, an autonomous machine can do something smart or helpful, but then the next moment it can do something that is "completely wonky, which makes things unpredictable," he said. For example, a GPS system seeking the shortest distance between two points could erroneously and catastrophically send a user driving over a cliff or the wrong way onto a one-way street. Being able to delve into those autonomous "thinking" processes to understand them is the key to this research, said Stefik.

The COGLE research will help researchers pursue answers to these issues, he said. "We're insisting that the program be explainable," he said, meaning that the autonomous systems should be able to say why they are doing what they are doing. "Machine learning so far has not really been designed to explain what it is doing."

The researchers involved with the project will essentially act as educators and teachers for the machine learning processes, improving their operations and making them more usable and even more human-like, said Stefik. "It's a sort of partnership where humans and machines can learn from each other."

That can be accomplished at three levels, he added: through reinforcement at the bottom level, through reasoning patterns like the ones humans use at the cognitive or middle level, and through explanation at the top sense-making level. The research aims to enable people to test, understand, and gain trust in AI systems as they continue to be integrated into our lives in more ways.

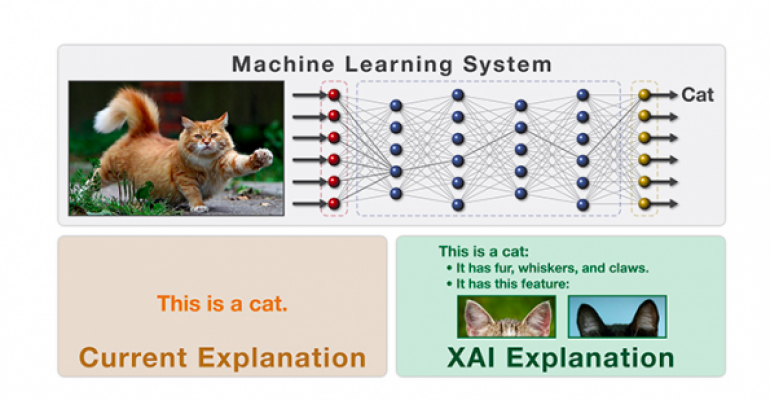

The research project is being conducted under DARPA's Explainable Artificial Intelligence (XAI) program, which seeks to create a suite of machine learning techniques that produce explainable models and enable human users to understand, appropriately trust, and effectively manage the emerging generation of artificially intelligent partners.

PARC, which is a Xerox company, is conducting the COGLE work with researchers at Carnegie Mellon University, West Point, the University of Michigan, the University of Edinburgh and the Florida Institute for Human & Machine Cognition. The key idea behind COGLE is to establish common ground between concepts and abstractions used by humans and the capabilities learned by a machine. These learned representations would then be exposed to humans using COGLE's rich sense-making interface, enabling people to understand and predict the behavior of an autonomous system.